For Regular Latest Updates Please Follow Us On Our WhatsApp Channel Click Here

Last updated on October 7th, 2024 at 02:21 am

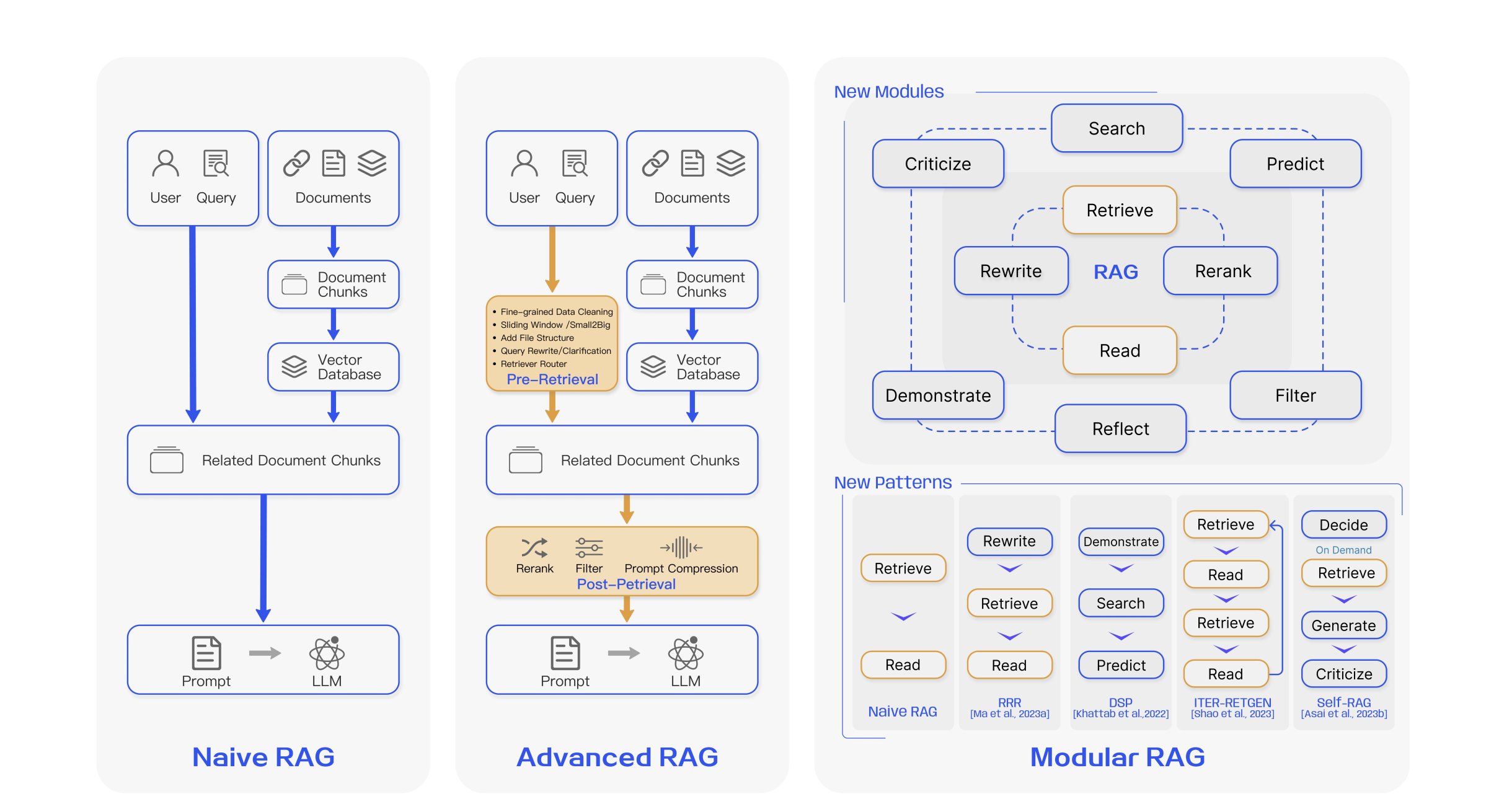

Building the best RAG-based LLM applications is the process of creating highly performant, scalable, and cost-effective applications that use LLMs to generate accurate, fast responses to user queries.

Building a RAG-based LLM application involves the following steps:

1. Develop a RAG-based LLM application from scratch: This involves creating a model that can retrieve relevant documents and generate responses based on the retrieved information.

Use a transformer-based generative model such as BART, T5, or GPT as your language model. (BERT-style encoders are better suited to the retriever than to text generation.)

For RAG, use a retriever model (e.g., DPR) to retrieve relevant documents and then use the language model to generate responses.

Implement an API to take user queries and return model-generated responses.

Example (using Hugging Face’s Transformers library):

```python
# Install necessary libraries first (run in a shell, not in Python):
#   pip install transformers datasets faiss-cpu torch

# Implement a basic RAG-based LLM
from transformers import RagRetriever, RagTokenForGeneration, RagTokenizer

tokenizer = RagTokenizer.from_pretrained("facebook/rag-token-base")
# use_dummy_dataset=True loads a small sample index instead of the full Wikipedia index
retriever = RagRetriever.from_pretrained(
    "facebook/rag-token-base", index_name="exact", use_dummy_dataset=True
)
# RagTokenForGeneration wraps the retriever and the generator, so retrieval happens inside generate()
model = RagTokenForGeneration.from_pretrained("facebook/rag-token-base", retriever=retriever)


# Implement function to generate response
def generate_response(query):
    input_ids = tokenizer(query, return_tensors="pt")["input_ids"]
    output_ids = model.generate(input_ids=input_ids)
    response = tokenizer.batch_decode(output_ids, skip_special_tokens=True)
    return response[0]


# Example usage
user_query = "Tell me about artificial intelligence"
response = generate_response(user_query)
print(response)
```

2. Scale the major workloads across multiple workers with different compute resources: This includes tasks such as loading, chunking, embedding, indexing, and serving.

Use a distributed computing framework like Apache Spark or Dask to parallelize and distribute tasks.

Deploy your application on a cloud platform and configure load balancing.
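To make the idea concrete, here is a minimal single-machine sketch of the same map-over-documents pattern. It uses the standard library's `concurrent.futures` as a stand-in for Spark or Dask, and the function names and the fixed character-based chunk size are assumptions made for illustration only.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_document(text, chunk_size=200):
    """Split one document into fixed-size character chunks."""
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


def chunk_corpus(documents, max_workers=4):
    """Chunk every document in parallel and flatten the results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        per_document = list(pool.map(chunk_document, documents))
    return [chunk for chunks in per_document for chunk in chunks]


documents = ["a" * 450, "b" * 120]
chunks = chunk_corpus(documents)
print(len(chunks))  # 4 chunks: 200 + 200 + 50 from the first document, 120 from the second
```

In a distributed framework the per-document function usually stays the same and only the executor changes, which is why it helps to keep each stage a plain function.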

3. Pass the query to the embedding model: This is done to semantically represent it as an embedded query vector.

Use a pre-trained embedding model such as Word2Vec or FastText, and pass the query through the model to get an embedded vector. These models embed individual words, so a multi-word query is typically represented by averaging its word vectors.

Example (using Gensim for Word2Vec):

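```python
import numpy as np
from gensim.models import Word2Vec

# Toy tokenized corpus; replace with your own sentences or load a pre-trained model
sentences = [["artificial", "intelligence", "is", "everywhere"], ["tell", "me", "about", "retrieval"]]

# Train or load a Word2Vec model
model = Word2Vec(sentences, vector_size=100, window=5, min_count=1, workers=4)

# Embed a query: Word2Vec embeds single words, so average the vectors of the in-vocabulary tokens
query_tokens = [token for token in "tell me about artificial intelligence".split() if token in model.wv]
query_embedding = np.mean([model.wv[token] for token in query_tokens], axis=0)
```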

4. Pass the embedded query vector to our vector database: This allows us to retrieve the top-k relevant contexts, which are measured by the distance between the query embedding and the embedded chunks in our knowledge base.

Use a vector index such as Faiss or Annoy to efficiently retrieve similar vectors.

Example (using Faiss):

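```python
import faiss
import numpy as np

top_k = 3
# Placeholder data; in practice these are the chunk embeddings and the query embedding from step 3.
# Faiss expects float32 arrays of shape (n, embedding_size).
embedded_vectors = np.random.rand(1000, 100).astype("float32")
query_embedding = np.random.rand(100).astype("float32")
embedding_size = embedded_vectors.shape[1]

# Index the embedded vectors (exact L2 search)
index = faiss.IndexFlatL2(embedding_size)
index.add(embedded_vectors)

# Query the index; search expects a 2-D array with one row per query
distances, indices = index.search(query_embedding.reshape(1, -1), top_k)
```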

5. Pass the query text and the retrieved context text to the LLM: The LLM will generate a response using the provided content.

Use the RAG-based LLM from step 1.

Example: See step 1.
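If you call a general-purpose LLM rather than the bundled RAG model, this step usually comes down to assembling a prompt. The sketch below shows one possible way to do that; the `build_prompt` name and the prompt wording are assumptions, not a prescribed template.

```python
def build_prompt(query, contexts):
    """Combine the user query and retrieved context passages into one prompt string."""
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(contexts, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {query}\nAnswer:"
    )


prompt = build_prompt(
    "What does RAG stand for?",
    ["RAG stands for Retrieval-Augmented Generation.", "Faiss is a similarity search library."],
)
print(prompt)
```

Numbering the passages makes it easier to ask the model to cite which context it relied on.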

6. Evaluate different configurations of our application: This helps to optimize for both per-component (e.g., retrieval_score) and overall performance (e.g., quality_score).
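As a rough illustration of a per-component metric, the sketch below computes a retrieval score as the fraction of queries whose known-correct source shows up among the retrieved sources. That definition, the function name, and the sample data are assumptions chosen for the example; your own metric may differ.

```python
def retrieval_score(retrieved_sources, expected_sources):
    """Fraction of queries whose expected source appears among the retrieved sources."""
    if not expected_sources:
        return 0.0
    hits = sum(
        1 for retrieved, expected in zip(retrieved_sources, expected_sources)
        if expected in retrieved
    )
    return hits / len(expected_sources)


# Hypothetical evaluation set: for each query, the top-k sources returned and the known-correct source
retrieved = [["doc1", "doc7"], ["doc3", "doc4"], ["doc9", "doc2"]]
expected = ["doc7", "doc5", "doc9"]
print(retrieval_score(retrieved, expected))
```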

7. Implement a hybrid agent routing approach: This is done between open-source and closed LLMs to create the most performant and cost-effective application.

Design a routing algorithm that dynamically decides whether to route a query to an open-source model or a closed LLM based on factors like cost and performance.

Routing logic: Develop logic to route queries to appropriate agents based on criteria like complexity or cost.
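A very simple version of such routing logic might look like the sketch below. The heuristics (query length and a few keywords), the thresholds, and the agent names `open-source-llm` and `closed-llm` are all hypothetical; a production router would more likely use a trained classifier and real cost data.

```python
def route_query(query, max_open_source_words=20, hard_keywords=("compare", "explain why", "step by step")):
    """Return the name of the agent that should handle the query."""
    text = query.lower()
    is_long = len(text.split()) > max_open_source_words
    looks_hard = any(keyword in text for keyword in hard_keywords)
    # Send only long or apparently complex queries to the more expensive model
    return "closed-llm" if (is_long or looks_hard) else "open-source-llm"


print(route_query("What is Ray?"))                          # open-source-llm
print(route_query("Compare Faiss and Annoy step by step"))  # closed-llm
```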

8. Serve the application in a scalable and available manner: This ensures that the application can handle a large number of requests.


Deploy your application on a container orchestration platform like Kubernetes.

Use auto-scaling to handle varying loads.

Employ redundant services and load balancing for high availability.
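Whatever platform you deploy on, it helps if each replica is a stateless service with a health endpoint that the load balancer can probe. The sketch below uses only the standard library's `wsgiref`; the `/healthz` and `/query` paths and the `answer_query` placeholder are assumptions for illustration, and `wsgiref` itself is intended for local development rather than production traffic.

```python
import json
from wsgiref.simple_server import make_server


def answer_query(query):
    """Placeholder for the RAG pipeline; replace with a real retrieval + generation call."""
    return f"You asked: {query}"


def app(environ, start_response):
    """Stateless WSGI app: GET /healthz for probes, POST /query for answers."""
    path, method = environ.get("PATH_INFO", ""), environ.get("REQUEST_METHOD", "GET")
    if path == "/healthz" and method == "GET":
        status, body = "200 OK", {"status": "ok"}
    elif path == "/query" and method == "POST":
        size = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(size) or b"{}")
        status, body = "200 OK", {"answer": answer_query(payload.get("query", ""))}
    else:
        status, body = "404 Not Found", {"error": "not found"}
    data = json.dumps(body).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"), ("Content-Length", str(len(data)))])
    return [data]


def serve(port=8000):
    """Run a local development server; call serve() explicitly to start it."""
    with make_server("", port, app) as server:
        server.serve_forever()
```

Because the handler keeps no state between requests, any number of replicas can sit behind the same load balancer and be scaled up or down freely.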

9. Learn how various methods like fine-tuning, prompt engineering, lexical search, reranking, data flywheel, etc. impact our application’s performance: This helps to improve the application over time.


Set up experiments to test different configurations and methods.

Collect and analyze performance metrics.

Iterate on your models and configurations based on the results.
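One lightweight way to organize such experiments is a grid over configuration values. The sketch below is only an outline: the parameter names `chunk_size` and `top_k` and the `toy_evaluate` scoring function are made up for the example, and a real `evaluate` would build the pipeline and score it on a labelled set.

```python
from itertools import product


def run_experiments(grid, evaluate):
    """Evaluate every combination in the grid and return results sorted best-first."""
    names = list(grid)
    results = []
    for values in product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        results.append((evaluate(config), config))
    return sorted(results, key=lambda item: item[0], reverse=True)


def toy_evaluate(config):
    """Stand-in metric; a real one would build the pipeline and score it on a labelled set."""
    return 1.0 / (abs(config["chunk_size"] - 500) + 1) + 0.01 * config["top_k"]


grid = {"chunk_size": [300, 500, 700], "top_k": [3, 5]}
best_score, best_config = run_experiments(grid, toy_evaluate)[0]
print(best_config)
```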

Note: The examples provided are simplified and may need adjustments based on your specific requirements and the libraries/frameworks you are using.

Remember, building things from scratch helps you understand the pieces better. Once you do, using a library makes more sense.

Breakdown of its key objectives:

1. Enhanced Performance and Scalability:

Load Distribution: Distributing major workloads across multiple workers with different compute resources ensures efficient handling of tasks like data loading, processing, and serving, preventing bottlenecks and optimizing resource utilization.

Hybrid Agent Routing: Strategically combining open-source software and closed LLMs strikes a balance between performance and cost, providing flexibility in model selection based on specific needs and constraints.

Scalable Architecture: Serving the application in a highly scalable and available manner guarantees its ability to handle large volumes of requests without compromising performance or uptime.

2. Optimized Knowledge Retrieval:

Semantic Query Representation: Transforming queries into embedded query vectors using an embedding model enables meaningful comparison with stored knowledge, ensuring accurate retrieval of relevant information.

Vector Database: Using a vector database for efficient retrieval of top-k relevant contexts based on query embedding similarity promotes accurate and context-aware responses.

3. High-Quality Response Generation:

Contextual Response Generation: The LLM uses both the query text and the retrieved context to generate more comprehensive and contextually relevant responses.

4. Continuous Improvement:

Systematic Evaluation: Evaluating different configurations optimizes performance at both component and system levels, identifying areas for improvement and fine-tuning.

Advanced Techniques: Exploration of methods like fine-tuning, prompt engineering, lexical search, reranking, and data flywheels enables ongoing refinement of the application’s effectiveness.

5. Deeper Understanding Through Building:

Hands-on Learning: The process emphasizes the value of building components from scratch to gain a deeper understanding of their interactions and nuances, fostering informed decisions about when to leverage external libraries.

In essence, this process aims to construct a robust and efficient LLM-powered application capable of delivering accurate, informative, and context-aware responses to user queries, while emphasizing continuous optimization and learning for ongoing improvement.


RAG-based LLM Applications Limitations And Mitigation Strategies

Limitations of RAG-based LLM Applications

Information Capacity: Base LLMs are only aware of the information they’ve been trained on and will fall short when required to know information beyond that.

Processing Speed: The generative LLMs have to process the content in a sequence. The longer the input, the slower the processing speed.

Reasoning Power: RAG applications are often topped with a generative LLM, which gives users the impression that the RAG application must have high-level reasoning ability. However, the LLM reasons only over the retrieved input, which is often incomplete or imperfect, so a RAG application does not have the level of reasoning power its interface suggests.

Mitigation Strategies for RAG-based LLM Applications

Fine-tuning and Prompt Engineering: Methods like fine-tuning, prompt engineering, lexical search, reranking, data flywheel, etc. can impact the application’s performance. Fine-tuning customizes a pre-trained LLM for a specific domain by updating most or all of its parameters with a domain-specific dataset.

Scaling: Major workloads (load, chunk, embed, index, serve, etc.) can be scaled across multiple workers with different compute resources.

Security Controls: The lack of security controls in RAG-based LLM applications can pose risks if not addressed properly. Understanding the security implications of RAG and implementing appropriate controls can help harness the power of LLMs while safeguarding against potential vulnerabilities.

These strategies can help overcome the limitations and enhance the performance of RAG-based LLM applications. However, it’s important to note that the effectiveness of these strategies can vary depending on the use case and implementation. It’s always recommended to evaluate different configurations of the application to optimize for both per-component and overall performance.

RAG-based LLM Applications Examples And Their Use Cases:

Here are some examples of RAG-based LLM applications and their use cases:

1. Question Answering Systems: One of the most common applications of RAG-based LLMs is in building question-answering systems. These systems can answer questions based on a specific external knowledge corpus. For instance, AWS demonstrated a solution to improve the quality of answers in such use cases over traditional RAG systems by introducing an interactive clarification component using LangChain. The system engages in a conversational dialogue with the user when the initial question is unclear, asks clarifying questions, and incorporates the new contextual information to provide an accurate, helpful answer.

2. Chatbots: Incorporating LLMs with chatbots allows the chatbots to automatically derive more accurate answers from company documents and knowledge bases. This can significantly improve the efficiency and effectiveness of customer service operations.

3. Documentation Assistant: Anyscale built a RAG-based LLM application that can answer questions about Ray, a Python framework for productionizing and scaling ML workloads. The goal was to make it easier for developers to adopt Ray and to help improve the Ray documentation itself.

These examples illustrate how RAG-based LLMs can be used to build intelligent systems that can interact with users in a more meaningful and context-aware manner. They extend the utility of LLMs to specific data sources, thereby augmenting the LLM’s capabilities.

Conclusion

RAG-based LLM applications still have a long way to go, and refining them is a continuous process driven by each user's requirements.

For further help, or for a free POC, you may get in touch with Abacus AI, as per the offer by their CEO, Ms. Bindu Reddy.

Wishing you all a happy time building RAG-based LLM applications.

FAQs On RAG Applications And RAG-Based Applications:

What are RAG applications?

RAG stands for Retrieval-Augmented Generation. It’s a technique that combines the strengths of two AI approaches:

Information retrieval:

This part finds relevant information from specific sources, like your company’s documents, databases, or even real-time feeds. Think of it as a super-fast and accurate librarian.

Text generation:

This is where the LLM comes in. It takes the retrieved information and uses its language skills to generate informative, comprehensive, and even creative responses, tailored to the specific context.

How do I create a RAG application?

Let’s dive into the fascinating world of Retrieval Augmented Generation (RAG) applications! 🚀

Firstly, it’s crucial to grasp the concept. RAG is a unique blend of information retrieval and a seq2seq generator. It’s like adding a personal touch to the prompt you feed into a large language model with your own data!

Now, let’s talk about the key components. You’ll need a corpus, which is a fancy term for a collection of documents. Then, there’s the user input – that’s where you come in! And finally, a way to measure how similar the user input is to the documents in the corpus.
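The three components above (a corpus, a user input, and a similarity measure) can be sketched in a few lines. Jaccard similarity over word sets is used here purely as an assumption because it needs no libraries; the corpus and the function names are made up for the example, and an embedding-based measure is the more common choice in practice.

```python
def jaccard_similarity(query, document):
    """Jaccard similarity between the word sets of two strings (0.0 to 1.0)."""
    query_words = set(query.lower().split())
    document_words = set(document.lower().split())
    union = query_words | document_words
    if not union:
        return 0.0
    return len(query_words & document_words) / len(union)


def most_similar(query, corpus):
    """Return the document in the corpus with the highest Jaccard similarity to the query."""
    return max(corpus, key=lambda document: jaccard_similarity(query, document))


corpus = [
    "Take a leisurely walk in the park",
    "Visit a local museum and discover something new",
    "Attend a live music concert",
]
print(most_similar("I like to walk in the park", corpus))
```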

Next, roll up your sleeves for some setup work. You’ll need to install some components to create the perfect environment for your project.

Once that’s done, it’s time to flex your coding muscles and develop some utility functions. These will be the backbone of your application, tailored to fit its needs like a glove.

But wait, there’s more! You’ll also need to construct a sidebar. This will be your control panel for uploads and configuration settings.

And last but not least, the main script. This is where the magic happens, integrating the RAG processes within a chat user interface.

Remember, there’s no substitute for building things from scratch when it comes to understanding the nuts and bolts. But once you’ve got that down, using a library can save you a lot of time.

And that’s it! You’re all set to create your very own RAG application. Don’t forget, the internet is your friend – there are plenty of resources out there if you need more detailed guides or tutorials. Happy coding! 😊

What is the RAG approach in LLM?

Think of RAG as a two-step process:

The librarian (information retrieval) gathers relevant materials.

The writer (text generation) uses those materials to craft a custom response.

The result? LLMs that are smarter, more accurate, and more helpful than ever before.

How do you implement RAG for an LLM?

Implementing RAG for an LLM involves setting up a system that:

Identifies the LLM’s task or question.

Searches relevant data sources for related information.

Provides the retrieved information to the LLM as additional context.

The LLM then uses this context to generate its response.

This might involve building custom pipelines, choosing the right retrieval models, and fine-tuning the LLM for your specific data and tasks.
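The flow described above can be sketched as a small orchestration function that takes the retriever and the generator as parameters. Everything here is a stand-in: `toy_retrieve`, `toy_generate`, and the `knowledge` dictionary are hypothetical placeholders for a real search backend and a real LLM call.

```python
def answer_with_rag(question, retrieve, generate, top_k=3):
    """Run the retrieve-then-generate loop with caller-supplied components."""
    contexts = retrieve(question)[:top_k]
    if not contexts:
        # Without supporting context, decline rather than let the model guess
        return "I could not find relevant information."
    prompt = "Context:\n" + "\n".join(contexts) + f"\n\nQuestion: {question}"
    return generate(prompt)


# Hypothetical stand-ins for a real retriever and a real LLM call
knowledge = {"ray": "Ray is a Python framework for scaling ML workloads."}


def toy_retrieve(question):
    return [text for key, text in knowledge.items() if key in question.lower()]


def toy_generate(prompt):
    return "Based on the context: " + prompt.split("\n")[1]


print(answer_with_rag("What is Ray?", toy_retrieve, toy_generate))
```

Passing the components in as arguments keeps the pipeline easy to test and makes it simple to swap retrieval models later.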

What are the benefits of RAG applications?

The benefits of RAG applications are:

More accurate and reliable responses: LLMs are less prone to hallucinations or factual errors when they have access to real-world data.

Improved domain-specific knowledge: RAG applications can be tailored to specific industries or fields, making LLMs true experts in their domains.

Personalized experiences: LLMs can access user data and preferences to generate highly personalized responses and recommendations.

Real-time insights: RAG applications can integrate with live data feeds, allowing LLMs to provide up-to-date information and analysis.

What are the practical applications of LLMs?

The possibilities are limitless, but here are some examples:

Customer service chatbots:

Imagine chatbots that can access your customer history and product information to provide personalized support and answer complex questions accurately.

Legal research assistant:

LLMs can analyze legal documents, case law, and regulations to help lawyers research cases and prepare arguments more efficiently.

Medical diagnosis and treatment:

LLMs can analyze patient data and medical literature to suggest diagnoses, treatment options, and even personalized care plans.

Financial analysis and reporting:

LLMs can analyze market trends, company financials, and news to generate accurate reports and investment recommendations.

RAG applications are still evolving, but they represent a significant leap forward in LLM technology. By combining the power of information retrieval with the creativity and fluency of text generation, RAG is opening up a world of possibilities for businesses and individuals alike.

What is the use of RAG in LLMs?

Retrieval-Augmented Generation, or RAG, is a technique that enhances the performance of Large Language Models (LLMs). It does this by consulting an external, authoritative knowledge base before generating a response. This process allows the model to provide more accurate and relevant information.

RAG is particularly useful in addressing some of the challenges associated with LLMs. For instance, LLMs can sometimes produce inaccurate or outdated information, or they may rely on non-authoritative sources. By using RAG, these issues can be mitigated as it retrieves information from reliable, pre-selected knowledge sources.

One of the key advantages of RAG is its cost-effectiveness. It allows LLMs to access specific domains or an organization’s internal knowledge base without the need for retraining. This makes it a practical solution for improving the output of LLMs across various contexts.

Furthermore, RAG has several applications. It enables language models to access the most recent information for generating reliable outputs. The evidence retrieved through RAG can enhance the accuracy, control, and relevance of the LLM’s responses.

In conclusion, RAG is an essential tool for keeping LLMs up-to-date with the latest, verifiable information, thereby reducing the need for constant retraining and updates. It is a significant advancement in the field of generative artificial intelligence technology.

What is an example of a RAG application?

Here are a few examples of Retrieval-Augmented Generation (RAG) applications:

Customized Suggestions:

RAG systems can be employed to scrutinize customer data, such as previous purchases and feedback, to formulate tailored product suggestions.

Corporate Intelligence:

Businesses can utilize RAG systems to examine competitor activities and market tendencies, which assists in making informed business decisions.

Content Comparison:

A large-scale application of RAG is seen in the ‘See Similar Post’ feature on platforms like Twitter. Here, the RAG system segments and stores posts in a vector database. When a user selects ‘see similar posts’, a query retrieves analogous posts and forwards them to an LLM to ascertain which posts bear the most resemblance to the original.

Streaming Services:

RAG applications can be employed to suggest more suitable movies on streaming services based on the user’s viewing history and ratings.

These instances demonstrate the versatility of RAG in enhancing the performance of Large Language Models across various sectors, resulting in more precise and pertinent outputs.

What are the applications of LLMs?

LLMs (Large Language Models) have a wide range of applications across various sectors. Here are a few key examples:

Translation:

LLMs have the capability to convert written content from one language to another.

Cybersecurity:

In the realm of cybersecurity, LLMs can be utilized for malware analysis. For instance, Sec-PaLM, a cybersecurity LLM developed by Google, is employed to scrutinize and explain script behaviors to determine whether they are malicious.

Content Generation:

LLMs have the potential to create diverse written content, encompassing blogs, articles, short stories, summaries, scripts, questionnaires, surveys, and social media posts.

Search Functionality:

LLMs can serve as an alternative search tool, delivering more contextually relevant results.

Natural Language Processing:

LLMs are instrumental in natural language processing tasks, which include language translation, text summarization, text classification, and question-and-answer dialogues.

Healthcare:

In the healthcare sector, LLMs can be employed to analyze medical records, assist in diagnosis, and provide medical information.

Robotics:

In robotics, LLMs can be utilized for tasks such as understanding and generating natural language instructions.

Code Generation:

LLMs can be employed to generate code, making them beneficial in software development.

Chatbots and Virtual Assistants:

LLMs are revolutionizing applications in chatbots and virtual assistants, reshaping the way we interact with technology and access information.

These instances demonstrate the versatility of LLMs in enhancing the performance of various tasks, resulting in more precise and pertinent outputs.

What is RAG used for?

Retrieval-Augmented Generation (RAG) is a technique that enhances the performance of Large Language Models (LLMs). It does this by consulting an external, authoritative knowledge base before generating a response. This process allows the model to provide more accurate and relevant information.

Accuracy Enhancement:

RAG amalgamates the advantages of retrieval-based and generative models, leading to responses that are more accurate and contextually relevant.

Contextual Understanding:

RAG exhibits a profound understanding of queries by retrieving and incorporating pertinent knowledge from a knowledge base, resulting in more precise responses.

Bias and Misinformation Mitigation:

The reliance of RAG on verified knowledge sources helps to alleviate bias and curtail the dissemination of misinformation, compared to purely generative models.

Versatility:

RAG can be deployed for various natural language processing tasks, such as answering questions, powering chatbots, and generating content, making it a versatile tool for language-related applications.

Facilitating Human-AI Collaboration:

RAG can support humans by providing valuable insights and information, enhancing the collaboration between humans and AI systems.

Progress in AI Research:

RAG represents a significant progression in AI research by integrating retrieval and generation techniques, pushing the frontiers of natural language understanding and generation.

In summary, RAG is utilized to enhance the accuracy, relevance, and versatility of natural language processing tasks, while also addressing challenges related to bias and misinformation.

What does RAG mean for LLM?

In the realm of Artificial Intelligence, Retrieval-Augmented Generation (RAG) is a significant advancement. It’s a framework that bolsters the capabilities of Large Language Models (LLMs). How does it do this? By pulling in facts from an external database, it ensures the LLM is grounded in the most accurate and current information available.

Here’s an interesting fact: LLMs understand the statistical relationships between words, but they don’t necessarily grasp their meanings. This is where RAG comes into play. It enhances the quality of responses generated by LLMs by grounding the model in external knowledge sources. This supplements the LLM’s internal information representation, ensuring access to the most up-to-date and reliable facts.

One of the key benefits of RAG is that it reduces the need for continuous model training on new data and parameter updates as situations change. This can significantly reduce both computational and financial costs, particularly in enterprise settings where LLM-powered chatbots are used.

To put it simply, RAG empowers LLMs to leverage a specialized knowledge base to answer questions more accurately. It’s akin to the difference between taking an open-book exam versus a closed-book exam. In a RAG system, the model answers a question by perusing the content in a book, rather than trying to recall facts from memory. It’s a fascinating development in the field of AI, wouldn’t you agree?

What is the difference between LLM RAG and fine-tuning?

Retrieval-Augmented Generation (RAG) and Fine-Tuning are two distinct strategies employed to optimize Large Language Models (LLMs). Each has its unique approach and offers specific advantages.

RAG is a framework that enhances LLMs by connecting them to external knowledge sources. It’s like giving the model a library card! This allows the model to pull in relevant, up-to-date information, enhancing the accuracy of its responses.

LLMs, interestingly, understand the statistical relationships between words but not their actual meanings. RAG steps in here, supplementing the LLM’s internal information with knowledge from external sources. This ensures the model’s responses are grounded in the most current and reliable facts.

Another advantage of RAG is its efficiency. It reduces the need for continuous model training and parameter updates, which can be computationally and financially demanding. This is particularly beneficial in enterprise settings where LLM-powered chatbots are in use.

Fine-tuning, on the other hand, is all about customization. It adapts the general language model to perform specific tasks more effectively. Two common types of fine-tuning are language modeling fine-tuning, which continues next-token prediction training on domain text, and supervised Q&A fine-tuning, which improves the model’s performance on question-answering tasks.

While RAG and Fine-Tuning might seem like different ends of a spectrum, they can actually complement each other. Fine-tuning can correct errors by adapting the model with specific data, while RAG provides access to dynamic external data sources and offers transparency in response generation.

In conclusion, the choice between RAG and Fine-Tuning depends on your specific needs. If you need access to external information, RAG might be your best bet. If you need to adapt models to specific tasks using labeled data, Fine-Tuning could be the way to go. And in many cases, a combination of both can significantly enhance model performance. It’s all about finding the right balance!

What is the RAG concept in LLM?

Retrieval-Augmented Generation (RAG) is a cutting-edge technique that enhances the performance of Large Language Models (LLMs) by integrating external knowledge bases into their response generation process.

LLMs, known for their ability to generate unique content across various tasks, are powered by billions of parameters trained on extensive data. RAG takes this a step further, allowing LLMs to access specific domains or an organization’s internal knowledge without retraining.

The significance of RAG lies in its ability to create AI bots capable of answering user queries in diverse contexts. It does this by cross-referencing authoritative knowledge sources. This not only guides the LLM to retrieve relevant information from trusted sources but also gives organizations more control over the text output.

RAG offers several advantages for an organization’s generative AI initiatives. It’s a cost-effective way to introduce new data to the LLM, ensuring access to the most up-to-date, reliable facts. Users can also check the model’s sources for accuracy, fostering trust.

In essence, RAG acts like a research assistant for AI, quickly referencing the latest data before responding. It improves LLM responses by grounding them on real, trustworthy information. It fetches updated and context-specific data from an external database, making it available to an LLM. This makes RAG a valuable tool in the world of AI and machine learning.

What are RAG techniques? / What is the RAG technique in LLM?

Retrieval-Augmented Generation (RAG) techniques are innovative strategies that boost the capabilities of Large Language Models (LLMs) by incorporating external knowledge bases.

LLMs, recognized for their proficiency in generating unique content for a variety of tasks, are driven by billions of parameters trained on extensive datasets. RAG enhances this process by enabling LLMs to tap into the specific domains or an organization’s internal knowledge without the need for retraining.

Key RAG techniques include:

Indexing:

This initial step in the RAG process involves the extraction, cleaning, and standardization of data into plain text. This text is then segmented into smaller units, known as chunks. Each chunk is converted into a numeric vector or embedding using an embedding model, and an index is created to store these chunks and their corresponding embeddings.

Retrieval:

At this stage, the user’s query is transformed into a vector representation using the same embedding model. The system then calculates similarity scores between the query vector and the vectorized chunks, retrieving the top K chunks that are most similar to the user’s query.

Generation:

The user’s query and the retrieved chunks are input into a prompt template. The augmented prompt, obtained from the previous steps, is then fed into the LLM.

Chunking Strategy:

In the realm of natural language processing, “chunking” refers to the division of the text into small, meaningful chunks. A RAG system can locate relevant context more quickly and accurately in smaller chunks of text than in whole documents.

Re-Ranking and Query Transformations:

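These strategies are used to overcome the limitations of basic RAG systems. Re-ranking reorders the retrieved chunks by relevance, and query transformations rewrite or expand the user’s query before retrieval; both help a RAG system answer questions about specific entries more accurately.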

Hierarchies and Multi-hop Reasoning:

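These are advanced techniques used when implementing RAG. Hierarchical retrieval organizes chunks at several levels of granularity (for example, document summaries above passages), and multi-hop reasoning chains several retrieval steps to answer questions that no single chunk covers. They build on the concepts of chunking, query augmentation, and knowledge graphs.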

The effectiveness of these techniques largely depends on the quality and structure of the data being used. In summary, RAG techniques are a valuable asset in the field of AI and machine learning, enhancing the performance and capabilities of LLMs.
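The indexing, chunking, and retrieval techniques listed above can be sketched together in plain Python. This is a toy under stated assumptions: the `embed` function is a bag-of-words counter standing in for a real embedding model, the chunk size and overlap are arbitrary, and the in-memory list stands in for a vector index.

```python
import math
from collections import Counter


def chunk_text(text, chunk_size=8, overlap=2):
    """Split text into word chunks of chunk_size words, overlapping by overlap words."""
    words = text.split()
    step = chunk_size - overlap
    return [" ".join(words[i:i + chunk_size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text):
    """Toy embedding: a bag-of-words count vector (a real system would use an embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k_chunks(query, index, k=2):
    """Return the k chunks whose embeddings are most similar to the query embedding."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


text = ("Faiss builds an index over embedding vectors for fast similarity search "
        "Chunking splits long documents into smaller passages before they are embedded")
index = [(chunk, embed(chunk)) for chunk in chunk_text(text)]  # indexing step
print(top_k_chunks("smaller passages of documents", index, k=1))  # retrieval step
```

The overlap between neighbouring chunks is a common design choice so that a sentence cut at a chunk boundary still appears whole in at least one chunk.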

What are the best practices for RAG?

Retrieval-Augmented Generation (RAG) is a potent technique that amplifies the abilities of Large Language Models (LLMs). Here are some of the best practices for implementing RAG:

Data Preparation:

The foundation of any successful RAG implementation is high-quality data. Ensure your data is clean, relevant, and well-structured.

Regular Updates:

RAG’s strength lies in its ability to keep the model updated with the latest data. Regularly update your knowledge sources to ensure the model has access to the most current information.

Output Evaluation:

Regularly assess the performance of your RAG system and make necessary adjustments. This is crucial for the success of your model.

Continuous Improvement:

Always strive for improvement. Experiment with different strategies and techniques to enhance the performance of your RAG system.

End-to-End Integration:

Ensure seamless integration of RAG into your existing systems. This includes effective MLOps practices like continuous integration and continuous deployment (CI/CD), robust monitoring, and regular model auditing.

Choose the Right Knowledge Sources:

Choose knowledge sources that are highly relevant to your domain and that provide accurate, up-to-date information.

Fine-tune your LLM:

Improve the performance of your LLM by fine-tuning it for your specific domain.

Remember, the effectiveness of these practices largely depends on the quality and structure of the data being used. These practices can help you bridge the gap between basic setups and production-level performance.

What is RAG reranking?

Reranking, a vital component of the Retrieval-Augmented Generation (RAG) pipeline, is a process that refines the initial document retrieval results.

In the RAG pipeline, the primary step involves document retrieval. Here, a retriever model extracts pertinent documents from an extensive corpus based on a specific query. However, the initial retrieval might not always yield the most relevant documents.

This is where the reranking process steps in. The reranker model rearranges the initially retrieved documents, ranking them based on their relevance to the query. This is achieved by factoring in additional features or employing a more sophisticated model that can better grasp the subtleties of the query and the documents.

The objective of reranking is to ensure the utilization of the most relevant documents for generating the final response during the question answering step. This significantly enhances the accuracy of the RAG pipeline, particularly when dealing with extensive and complex document corpora. It serves as a secondary filtration layer, boosting the precision of the document retrieval process. Therefore, reranking in RAG essentially focuses on optimizing and refining the document selection for the final response generation.
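A minimal sketch of this second-stage step is shown below. The `coverage_score` function is only a stand-in for the more sophisticated reranker model described above (in practice often a cross-encoder), and the candidate list imitates the output of a hypothetical first-stage retriever.

```python
def rerank(query, candidates, score, top_n=2):
    """Re-order first-stage candidates with a more precise scoring function."""
    return sorted(candidates, key=lambda doc: score(query, doc), reverse=True)[:top_n]


def coverage_score(query, document):
    """Stand-in for a reranker model: fraction of query words that occur in the document."""
    query_words = set(query.lower().split())
    document_words = set(document.lower().split())
    return len(query_words & document_words) / len(query_words) if query_words else 0.0


# Candidates in the order a hypothetical first-stage retriever returned them
candidates = [
    "Ray is a framework for scaling Python workloads",
    "Reranking reorders retrieved documents by relevance to the query",
    "Faiss performs similarity search over vectors",
]
print(rerank("how does reranking order retrieved documents", candidates, coverage_score))
```

Because the reranker only sees the handful of candidates from the first stage, it can afford a slower and more accurate scoring function than the retriever.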

What is the purpose of reranking steps in a RAG pipeline?

The Retrieval-Augmented Generation (RAG) pipeline employs a two-pronged approach, encompassing document retrieval and question answering. The reranking phase in the RAG pipeline serves to refine the outcomes of the initial retrieval phase.

Here’s a more granular breakdown:

Document Retrieval:

The first phase involves the retriever model, which, given a query (such as a question), extracts a set of pertinent documents from a vast corpus.

Reranking:

After the initial retrieval, the reranking phase begins. This phase is pivotal because the initial retrieval may not always yield the most relevant documents. The reranker model rearranges the initially retrieved documents based on their relevance to the query. This is achieved by considering additional features or employing a more sophisticated model that can better capture the subtleties of the query and the documents.

Question Answering:

The final phase involves the generator model, which utilizes the reranked documents to generate a response to the query.

In essence, the reranking phase ensures the utilization of the most relevant documents for generating the final response, thereby enhancing the accuracy of the RAG pipeline. It serves as a secondary filtration layer to boost the precision of the document retrieval process. This is especially beneficial when dealing with large and intricate document corpora where the initial retrieval may not be highly accurate.
